Let's cut through the AI hype cycle.

Every week there's a new "revolutionary" announcement, a new benchmark being smashed, a new startup claiming to have solved AGI. It's exhausting — and most of it doesn't matter for anyone actually building products.

So here's what actually matters, backed by hard numbers from McKinsey, Gartner, PwC, and other organizations that measure real-world adoption.

Top Generative AI trends going into 2026:

- AI agents go enterprise — 79% adoption, 171% expected ROI

- Domain-specific models take over — 50%+ of enterprise GenAI by 2028

- Multimodal becomes the default — 40% of GenAI will be multimodal by 2027

- AI video generation matures — Runway Gen 4.5 beats Sora 2, market hits $1.18B by 2029

- Small models beat giants — 75% of enterprise data at the edge by 2025

- RAG becomes the enterprise standard — 71% of GenAI adopters now use RAG

- AI governance gets real — EU AI Act enforcement begins, fines of up to €35M now in force

Let's get into it.

1. AI Agents Are Going Enterprise — And It's Happening Fast

If 2024 was the year everyone talked about AI agents, 2025 is the year companies actually started deploying them.

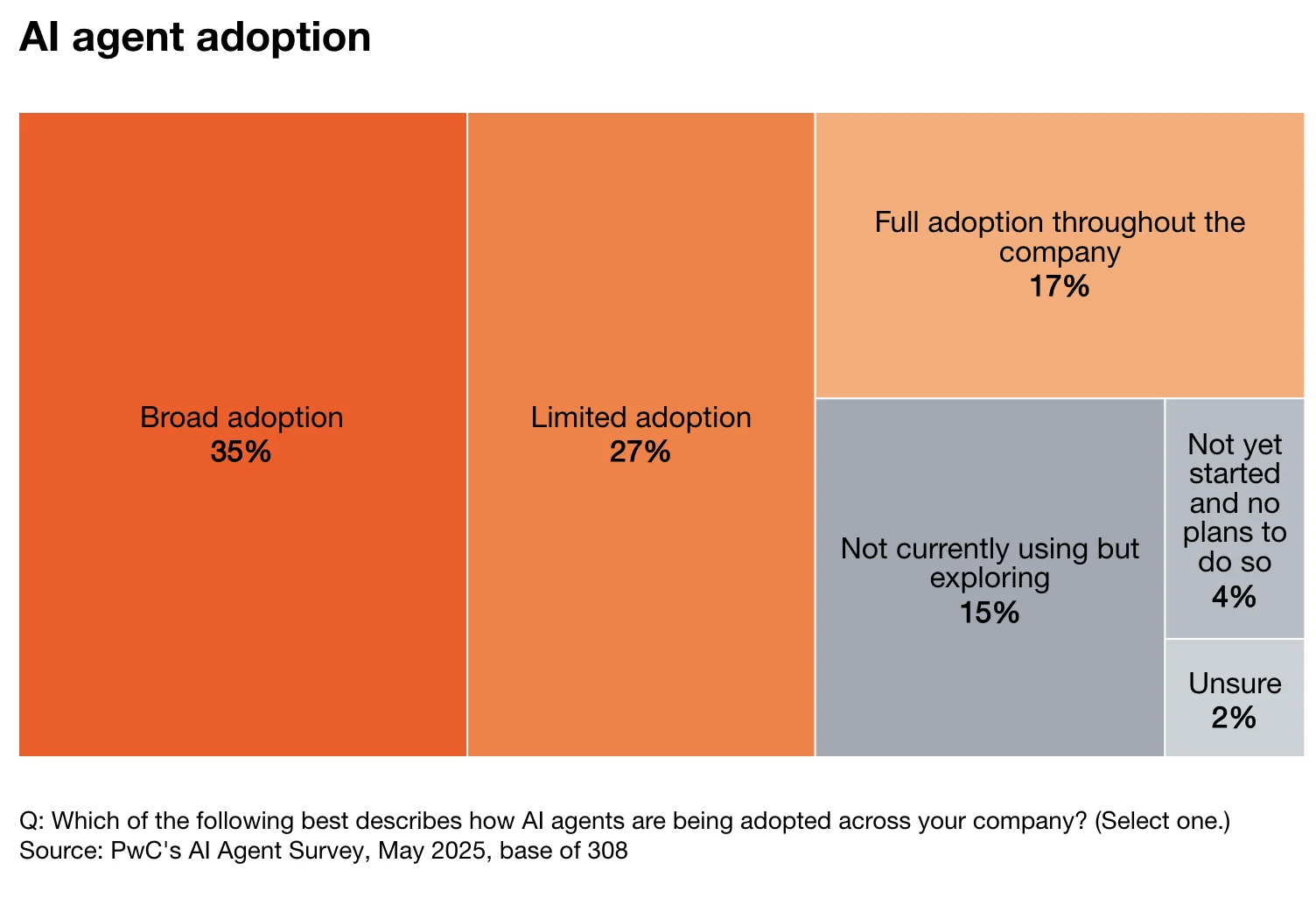

According to PwC's May 2025 survey of 300 senior executives, 79% say AI agents are already being adopted in their companies. And they're not just experimenting — 88% plan to increase AI-related budgets in the next 12 months specifically because of agentic AI capabilities.

McKinsey's 2025 State of AI report adds more context: 23% of organizations are already scaling agentic AI systems somewhere in their enterprises, with an additional 39% experimenting with AI agents. That's 62% of organizations at least dipping their toes in.

The ROI expectations are wild. Organizations project an average return of 171% on agentic AI deployments. U.S.-based companies are even more bullish, estimating returns of 192%.

Source: PwC AI Agent Survey 2025

What's happening in the real world

Salesforce launched Agentforce, its autonomous AI agent platform, in late 2024. According to the company's announcements, these agents can handle customer service inquiries, qualify sales leads, and manage campaigns without human intervention, executing multi-step tasks across the Salesforce CRM ecosystem. (For how this is transforming marketing specifically, see our digital marketing trends for 2026.)

Microsoft has embedded agents throughout Copilot. Microsoft 365 Copilot can now orchestrate across apps, schedule meetings based on email context, pull data from multiple sources to answer questions, and automate entire workflows that previously required human coordination.

Anthropic released Claude's computer use capability, allowing their AI to interact directly with desktop applications — clicking buttons, filling forms, navigating software. It's early, but it signals where things are heading: AI that doesn't just generate text, but actually operates software.

Gartner predicts that 40% of enterprise applications will feature task-specific AI agents by 2026, up from less than 5% in 2025. That's at least an 8x jump in a single year.

Where this is heading

The agent market is projected to hit $103.6 billion by 2032, up from $3.7 billion in 2023. That's a 45.3% CAGR, enough to more than double the market every two years.
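To sanity-check growth figures like these, here is a minimal Python sketch of the standard compound-annual-growth-rate formula and the doubling time it implies. The function names are ours, and the nine-year window (2023 to 2032) is an assumption about how the projection was calculated.

```python
import math

def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate as a fraction (0.45 means 45%)."""
    return (end_value / start_value) ** (1 / years) - 1

def doubling_time(rate: float) -> float:
    """Years needed to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + rate)

# Figures quoted above: $3.7B in 2023 growing to $103.6B in 2032.
# Assumption: a 9-year compounding window.
implied_rate = cagr(3.7, 103.6, 9)
print(f"Implied CAGR: {implied_rate:.1%}")

# Doubling time at the quoted 45.3% rate.
print(f"Doubling time: {doubling_time(0.453):.2f} years")
```

The implied rate lands close to the quoted 45.3%, and the doubling time comes out a little under two years. The same two functions work for any of the other market projections in this article.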

But here's the catch: most organizations aren't agent-ready. The infrastructure isn't there. APIs aren't exposed properly. Data isn't accessible. The companies that get agents right in 2026 will be the ones that did the boring work of making their systems interoperable first.

2. Domain-Specific AI Models Are Taking Over

The era of one-size-fits-all AI is ending. Enterprises are abandoning the plug-and-play ChatGPT approach for something more powerful: AI models trained specifically for their industry, their workflows, their data.

Gartner predicts that by 2028, over 50% of GenAI models used by enterprises will be domain-specific — up from less than 5% in 2023. That's not a gradual shift. That's a complete rewiring of how companies deploy AI.

Why the rush? General-purpose models are impressive but inefficient. They hallucinate on specialized terminology. They miss industry nuances. They require expensive prompt engineering to get right. Domain-specific models solve all three problems — with higher accuracy, better compliance, and lower costs.

According to TechTarget's analysis, organizations have been moving away from generic deployments and instead building "vertical applications of training models, using GenAI engines for organization-, industry- and workflow-specific applications."

What's happening in the real world

Healthcare is leading the charge. Clinical AI models trained on medical literature, patient records, and diagnostic guidelines outperform general models on medical tasks, without the dangerous hallucinations that make GPT-4 unsuitable for clinical decisions. Hippocratic AI's models and Google's Med-PaLM are purpose-built for healthcare.

Legal services followed quickly. Law firms can't afford AI that invents case citations. Domain-specific legal models trained on verified case law, statutes, and regulatory documents deliver accuracy that general models simply can't match. Harvey AI raised $80 million specifically to build legal-focused AI.

Financial services see massive ROI. Models trained on financial instruments, market data, and compliance regulations understand context that general models miss entirely. Bloomberg's BloombergGPT — trained on decades of financial data — demonstrated that domain expertise beats raw scale.

Manufacturing and engineering are next. AI models trained on CAD files, technical specifications, and maintenance logs can interpret blueprints and troubleshoot equipment in ways that general models fail at completely.

Where this is heading

The playbook is becoming clear: start with a foundation model, fine-tune on your industry data, deploy for your specific use cases. The companies winning aren't the ones with the biggest models — they're the ones with the best training data for their domain.

Expect a proliferation of industry-specific AI vendors. Healthcare AI, legal AI, financial AI, manufacturing AI — each vertical will have specialized players who understand the nuances that horizontal platforms miss.

The question isn't "which foundation model should we use?" anymore. It's "who has the best data and expertise for our specific industry?"

3. Multimodal AI Becomes the Default

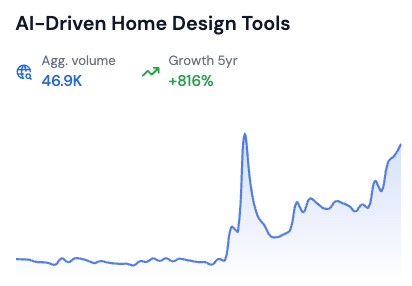

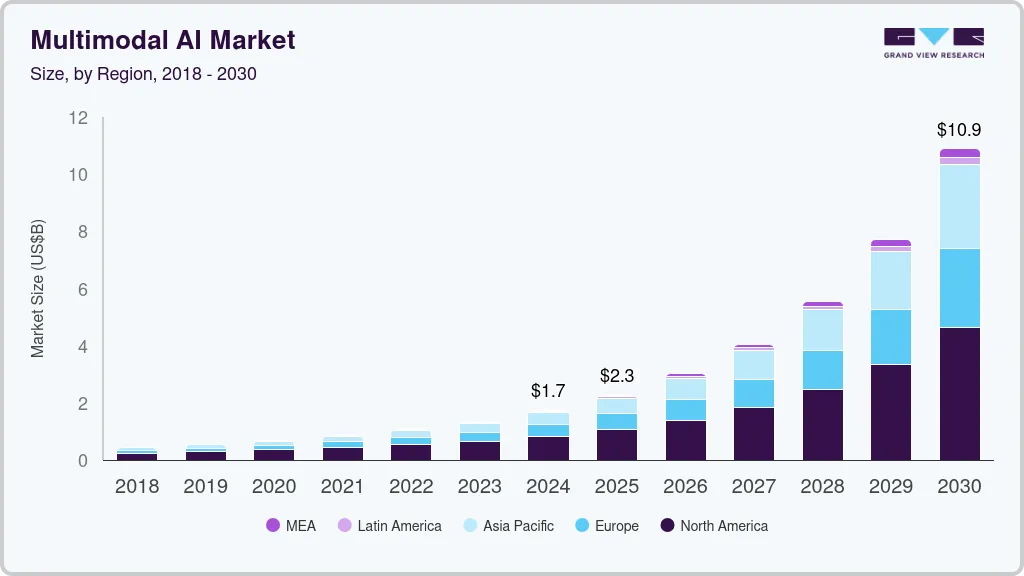

The days of text-only AI are numbered. According to Gartner predictions, 40% of generative AI solutions will be multimodal by 2027 — up from just 1% in 2023.

The multimodal AI market was valued at $1.73 billion in 2024 and is projected to reach $10.89 billion by 2030, growing at a CAGR of 36.8%. Some projections put it even higher — up to $20.58 billion by 2032.

Source: Grand View Research

This isn't just about models that can "see" images. Modern multimodal systems process text, images, video, audio, and structured data simultaneously — understanding the relationships between them.

What's happening in the real world

GPT-4o (the "o" stands for "omni") was the watershed moment: a single model that could see, hear, and speak, with real-time voice conversations that felt natural. Voice response latency dropped from several seconds to a few hundred milliseconds.

Google's Gemini 2 doubled down on native multimodality. Rather than bolting different capabilities together, Gemini was trained from the ground up on mixed-modality data. The result: more coherent understanding of how text, images, and audio relate.

Claude 3 brought sophisticated document understanding. Upload a complex PDF with charts, tables, and text? Claude can analyze all of it in context, extracting insights that require understanding the visual layout alongside the words.

Healthcare is seeing rapid adoption. Multimodal AI can analyze medical images alongside patient notes, combining visual diagnosis with clinical context. Retail uses it for visual search combined with natural language queries. Manufacturing combines sensor data with image analysis for quality control.

Where this is heading

North America held 48% of the multimodal AI market in 2024, but Asia Pacific is growing fastest, driven by massive investments from Chinese tech giants and government-funded language-specific models.

The next frontier is real-time multimodal understanding. AI that can participate in video calls, understand shared screens, respond to gestures, and maintain context across all modalities simultaneously. We're not there yet, but the pieces are falling into place.

4. AI Video Generation Finally Matures

2025 was the year AI video stopped being a novelty and started being useful.

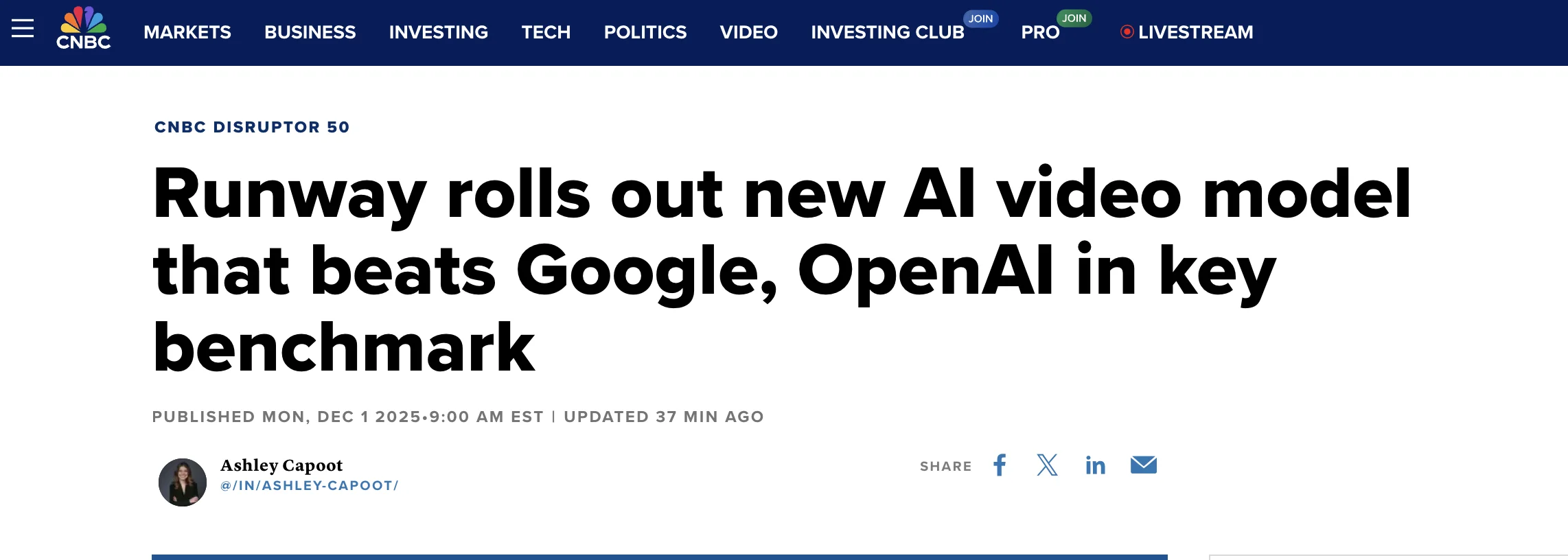

The text-to-video AI market is projected to grow from $0.31 billion in 2024 to $1.18 billion by 2029 — a 29.5% CAGR. But the numbers don't capture how dramatically the quality improved.

As of December 2025, Runway's Gen 4.5 holds the #1 spot on the Video Arena leaderboard (maintained by Artificial Analysis, an independent AI benchmarking company). Google's Veo 3 sits in second place. OpenAI's Sora 2 Pro? Seventh.

Source: CNBC / Artificial Analysis

Runway's model understands physics, human motion, camera movements, and cause-and-effect in ways that seemed impossible a year ago.

What's happening in the real world

OpenAI's Sora 2 brought video generation to consumers through ChatGPT Plus ($20/month) and Pro ($200/month). The accessibility changed everything — suddenly marketing teams, content creators, and indie filmmakers could experiment without massive production budgets.

Runway focused on professional workflows. Their Gen-3 and Gen-4 models offer precise camera motion control, masking tools, and image-to-video pipelines that integrate with traditional editing software. They're building Premiere Pro and DaVinci Resolve plugins.

Google's Veo 3 pushed quality boundaries, particularly in realistic human faces and complex scene composition. The competition is driving rapid improvement across all platforms.

The use cases that took off weren't the flashy ones. Product visualization — showing products in different contexts without physical photography. Explainer videos — generating visual demonstrations for complex concepts. Storyboarding — rapidly iterating on creative concepts before committing to full production.

Where this is heading

The 60-second limit is the next barrier to break. Current models struggle with coherence over longer durations. Expect this to extend to several minutes by late 2026.

Real-time generation is coming. Imagine AI that can generate video during live events, personalizing content for different viewers. The advertising implications are enormous — and slightly terrifying.

Pricing is dropping fast. Runway offers a free tier now. As competition intensifies, expect professional-grade video generation to become accessible to anyone with a creative vision and a laptop.

5. Small Language Models Are Outperforming Giants

Here's the counterintuitive trend: while everyone chases bigger models, enterprises are discovering that smaller is often better.

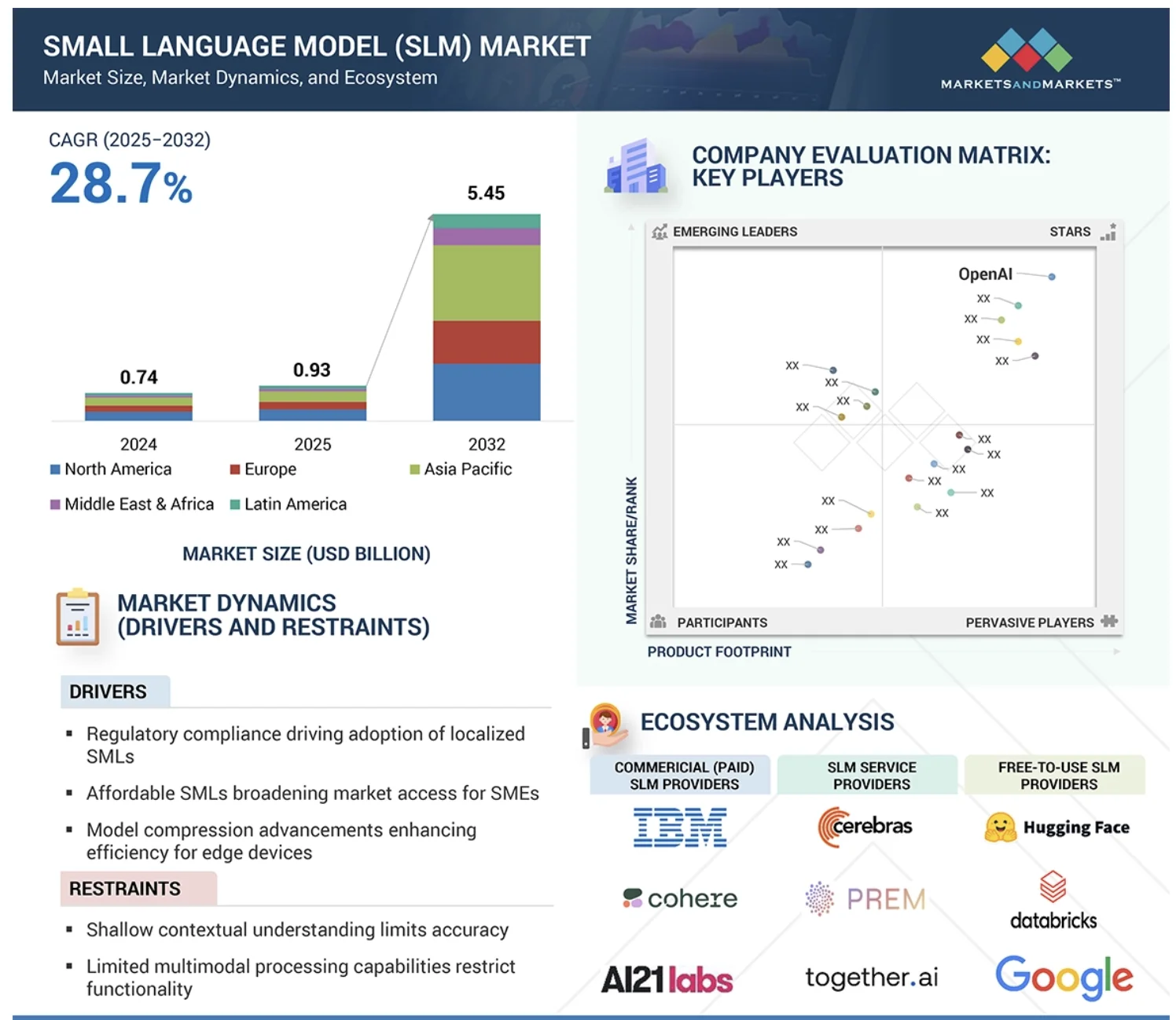

The small language model market is projected at $0.93 billion in 2025, growing to $5.45 billion by 2032 at a 28.7% CAGR. That growth is driven by a simple reality: you can't send sensitive enterprise data to external APIs, and you can't run 175-billion-parameter models on edge devices.

Source: MarketsandMarkets

According to World Economic Forum analysis, 75% of enterprise data was projected to be processed at the edge by 2025. That's data that never leaves the device, never hits the cloud, never creates compliance headaches.

Small language models (typically under 10 billion parameters) can run locally on phones, laptops, IoT devices, and on-premises servers — while still delivering impressive performance for specific tasks.

What's happening in the real world

Microsoft has led the charge with their Phi series. In December 2024, they released Phi-4, which outperforms comparable and larger models on math-related reasoning despite its smaller size. In July 2025, they launched Mu — a 330-million-parameter model optimized for on-device deployment on Copilot+ PCs.

Apple made on-device AI a flagship feature. Their latest devices run language models locally, enabling features like advanced autocomplete, image understanding, and voice transcription without internet connectivity.

NVIDIA researchers published findings suggesting small language models could become the backbone of next-generation intelligent enterprises — particularly for agentic AI applications where you need many models running simultaneously.

The business case is compelling:

- Lower costs — No API fees, no cloud compute charges

- Lower latency — Local processing in milliseconds

- Better privacy — Data never leaves the device

- Regulatory compliance — Easier to meet data sovereignty requirements

- Reliability — No dependency on external services

Where this is heading

Domain-specific SLMs are the killer app. A small model fine-tuned for your specific use case often outperforms a general-purpose giant. Healthcare organizations are training models on medical literature. Financial firms are building models that understand their specific instruments and terminology.

Expect a bifurcation: frontier models for complex reasoning and creative tasks, small models for everything that needs to be fast, private, or embedded. The winning strategy isn't choosing one — it's knowing when to use each.
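One way to picture "knowing when to use each" is a simple routing rule in front of the two model tiers. The sketch below is purely illustrative: the `Request` fields, the 200 ms threshold, and the tier names `small-local` and `frontier-cloud` are hypothetical, not taken from any vendor's product.

```python
from dataclasses import dataclass

@dataclass
class Request:
    contains_sensitive_data: bool   # must the data stay on-device or on-prem?
    max_latency_ms: int             # latency budget for the response
    needs_complex_reasoning: bool   # multi-step or open-ended creative work?

def choose_model(req: Request) -> str:
    """Pick a model tier using hypothetical, illustrative rules."""
    # Privacy wins first: sensitive data never goes to an external API.
    if req.contains_sensitive_data:
        return "small-local"
    # Tight latency budgets rule out a network round trip (assumed threshold).
    if req.max_latency_ms < 200:
        return "small-local"
    # Only pay for the frontier model when the task needs it.
    if req.needs_complex_reasoning:
        return "frontier-cloud"
    return "small-local"

print(choose_model(Request(False, 1000, True)))   # complex, not sensitive
print(choose_model(Request(True, 1000, True)))    # complex but sensitive
```

A production router would likely weigh cost and measured quality as well, but the ordering of the checks captures the idea: constraints first, capability second.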

6. RAG Becomes the Enterprise Standard

Retrieval-Augmented Generation went from buzzword to baseline in 2025.

The RAG market hit $1.2 billion in 2024 and is projected to reach $11.0 billion by 2030 — a 49.1% CAGR. But adoption metrics tell a clearer story.

According to a Snowflake report, 71% of early GenAI adopters are implementing RAG to ground their models. And 96% are doing some kind of fine-tuning or augmentation — almost nobody deploys base models directly anymore.

Source: Deloitte

Why the rush? Because hallucinations destroy enterprise trust. When your AI confidently fabricates legal citations or invents product specifications, the consequences are real. RAG addresses this by forcing models to retrieve relevant documents before generating responses.
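The retrieve-then-generate pattern can be sketched in a few lines of Python. This is a toy illustration, not a production pipeline: the documents are made up, word overlap stands in for embedding similarity, and the final call to a language model is left out because it depends on whichever provider you use.

```python
import re
from collections import Counter

# Hypothetical knowledge base; a real system would use a vector database.
DOCUMENTS = [
    "The X200 router supports Wi-Fi 6 and has four gigabit Ethernet ports.",
    "Refunds are available within 30 days of purchase with a valid receipt.",
    "The X200 router ships with a two-year limited hardware warranty.",
]

def tokenize(text: str) -> Counter:
    """Lowercase word counts; a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents ranked by word overlap with the query."""
    q = tokenize(query)
    scored = [(sum((q & tokenize(d)).values()), d) for d in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:k] if score > 0]

def build_prompt(query: str, passages: list[str]) -> str:
    """Ground the model by putting retrieved text ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say so.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )

question = "What warranty does the X200 router have?"
passages = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, passages)
print(prompt)  # this string would then be sent to the language model
```

The key design choice is that the model only sees text pulled from a source you control, and the prompt tells it to admit when that text does not contain the answer.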

What's happening in the real world

The numbers on hallucination reduction are dramatic. Field studies record hallucination reductions between 70% and 90% when RAG pipelines are implemented well, and a separate analysis put the reduction at 71%.

Legal services were early adopters. Law firms can't afford AI that invents case citations. RAG systems that pull from verified legal databases made AI suddenly viable for contract review, research, and document drafting.

Healthcare followed quickly. Medical AI grounded in peer-reviewed literature and clinical guidelines is far more trustworthy than AI that might confuse a drug name with a Pokémon.

Financial services saw some of the highest ROI. The industry's strict compliance requirements made RAG essential, and organizations report 4.2x returns on their GenAI investments when properly implemented.

The shift is visible in how enterprises allocate use cases: 30-60% of enterprise AI implementations now use RAG, particularly wherever the use case demands high accuracy, transparency, and reliable outputs.

Where this is heading

"Agentic RAG" is the 2026 buzzword — combining autonomous agents with retrieval systems. Instead of just retrieving documents and generating responses, these systems can decide what to search for, evaluate relevance, and iteratively refine their research.

But there's warranted caution. Analysts note that mistakes in agentic chains have more detrimental impact — a bad retrieval early in the chain compounds through every subsequent step.
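A rough way to see why errors compound: if each step in a chain succeeds with some probability and failures are independent, the chance that the whole chain succeeds is that probability raised to the number of steps. The 95% per-step figure below is an illustrative assumption, not a measured result, and real steps are rarely fully independent.

```python
def chain_success_rate(step_accuracy: float, steps: int) -> float:
    """Probability that every step succeeds, assuming independent failures."""
    return step_accuracy ** steps

# Illustrative assumption: each step is right 95% of the time.
for steps in (1, 3, 5, 10):
    rate = chain_success_rate(0.95, steps)
    print(f"{steps:>2} steps: {rate:.1%} chance the whole chain is correct")
```

Under these assumptions, a ten-step chain is fully correct only about six times in ten, which is why checking retrieval quality early in the chain matters so much.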

The infrastructure investments happening now (vector databases, embedding models, chunking strategies) will pay dividends for years. RAG isn't a trend that's going away.

7. AI Governance Gets Real — And Expensive

The party's over for "move fast and break things" AI deployment. 2025 marked the beginning of serious AI regulation enforcement.

The EU AI Act's first prohibitions took effect on February 2, 2025 — banning AI systems deemed to pose unacceptable risks. On August 2, 2025, governance rules and obligations for General Purpose AI models became mandatory.

The penalties are not theoretical. Using prohibited AI practices can result in fines of up to €35 million or 7% of worldwide annual turnover, whichever is higher. Most other violations carry fines of up to €15 million or 3% of turnover.
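The "whichever is higher" rule is easy to work through in code. The sketch below uses the ceilings set out in Article 99 of the final regulation (€35 million or 7% for prohibited practices, €15 million or 3% for most other violations). The function name and the example turnovers are ours, and the sketch ignores the separate treatment of smaller companies, so treat it as arithmetic, not legal advice.

```python
def max_fine_eur(worldwide_turnover_eur: float,
                 fixed_cap_eur: float,
                 turnover_share: float) -> float:
    """Upper bound on a fine: the higher of a fixed cap or a share of turnover."""
    return max(fixed_cap_eur, turnover_share * worldwide_turnover_eur)

# Ceilings from Article 99 of the EU AI Act: (fixed cap in EUR, share of turnover).
PROHIBITED_PRACTICES = (35_000_000, 0.07)
OTHER_VIOLATIONS = (15_000_000, 0.03)

# Hypothetical companies with EUR 2 billion and EUR 100 million in annual turnover.
for turnover in (2_000_000_000, 100_000_000):
    worst_case = max_fine_eur(turnover, *PROHIBITED_PRACTICES)
    print(f"Turnover EUR {turnover:,}: fine ceiling EUR {worst_case:,.0f}")
```

For a large company the percentage dominates. For a smaller one, the fixed cap sets the ceiling.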

According to the 2025 State of Data Compliance and Security Report, 23% of global organizations already need to comply with the EU AI Act. And this is just the beginning.

What's happening in the real world

Compliance is forcing real changes. For high-risk AI applications, organizations must provide detailed technical documentation, implement robust risk management systems, and ensure human oversight. These systems must also pass a conformity assessment before they reach the market.

The "high-risk" classification matters. A study of 106 enterprise AI systems found that 18% were high-risk, 42% were low-risk, and 40% were unclear whether high or low risk. That ambiguity is forcing legal teams to make conservative interpretations.

Global regulation is spreading. Stanford University noted that legislative mentions of AI rose 21.3% across 75 countries since 2023 — a ninefold increase since 2016. Brazil, China, Japan, Singapore, and the UAE are all introducing complementary measures that establish a more defined global baseline.

Despite the stakes, the McKinsey report found something troubling: fewer than half of organizations report taking concrete steps to mitigate AI risks, even for the most urgent threats. There's a gap between awareness and action.

68% of organizations are worried about privacy and compliance audits related to AI. And 86% plan to invest in AI data privacy solutions over the next 1-2 years. The compliance budget is becoming a real line item.

Where this is heading

By August 2026, each EU Member State must establish at least one AI regulatory sandbox at the national level. These sandboxes will let companies test AI systems in controlled environments before full deployment — essentially a staging environment for regulatory compliance.

The winners in this environment aren't necessarily the most innovative — they're the ones who built compliance into their AI development process from day one. Documentation, audit trails, explainability, and human oversight aren't afterthoughts anymore.

Expect AI governance tools to become their own category. Automated compliance checking, bias detection, documentation generators, risk assessment frameworks — the boring infrastructure of responsible AI is suddenly a market opportunity.

The Common Thread

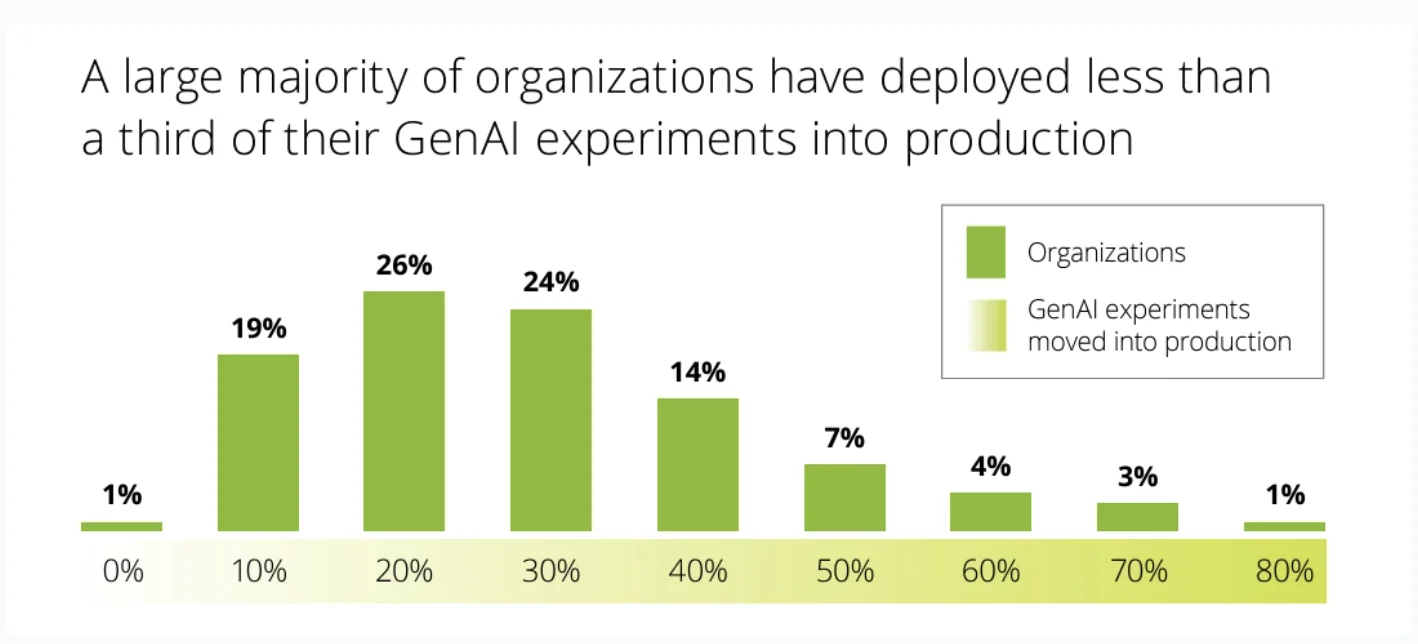

Look across these seven trends and a pattern emerges: the generative AI market is growing up.

Early AI adoption was about experimentation — what can this technology do? 2026 adoption is about integration — how do we make this technology work reliably, safely, and at scale?

AI agents move from demos to production when they have the APIs, data access, and guardrails to operate safely. Domain-specific models win because they understand your industry better than any general-purpose giant. Multimodal becomes useful when it's integrated into workflows, not shown off in keynotes. RAG wins because it solves the trust problem. Small models win because they solve the deployment problem. Governance wins because it solves the liability problem.

The companies thriving in this environment share a characteristic: they're not chasing the newest model announcement. They're doing the unglamorous work of making AI actually work — building pipelines, establishing governance, measuring real outcomes, and iterating based on what they learn.

If 2024 was "can AI do this?" and 2025 was "should we do this with AI?", 2026 is "how do we do this with AI responsibly and at scale?"

The organizations that answer that question well won't just adopt AI. They'll be transformed by it.
