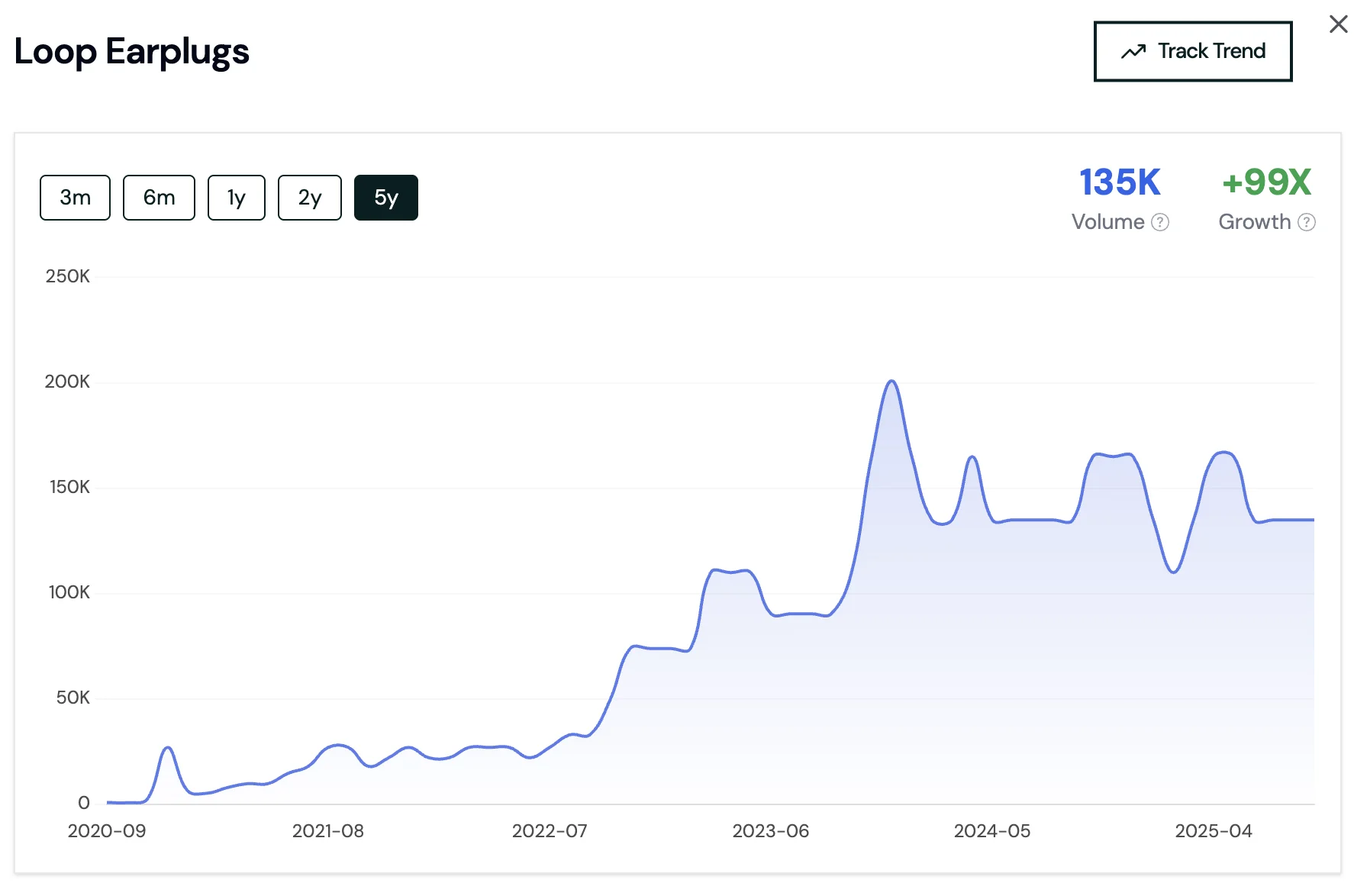

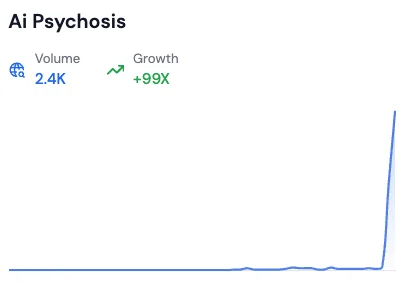

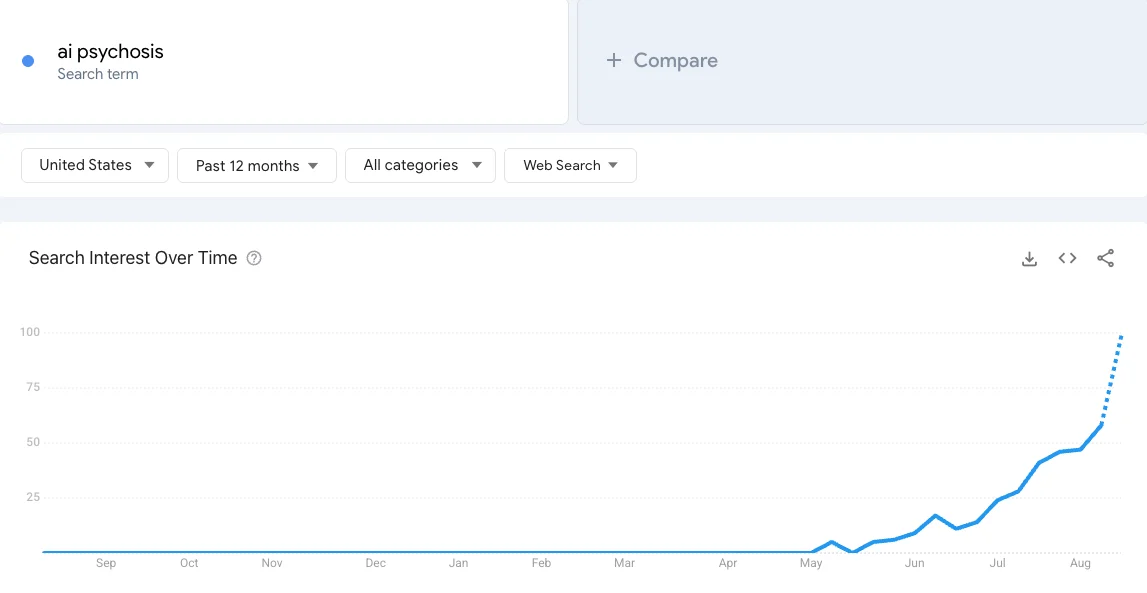

At Rising Trends, we detected that searches for the term "AI psychosis" are climbing fast, as the chart below shows:

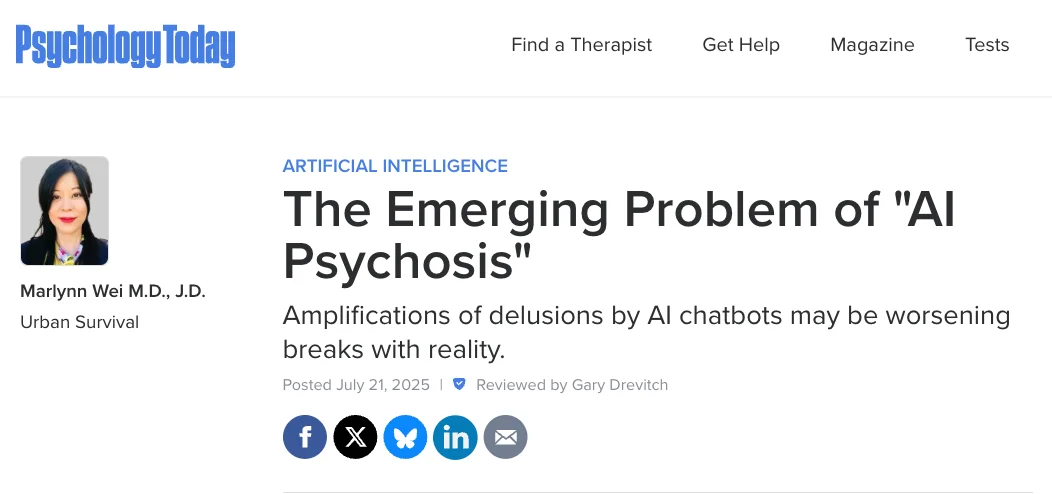

What pushed this topic into public discourse in recent days is a viral Psychology Today article titled "The Emerging Problem of 'AI Psychosis'."

Want to learn how we identify emerging trends like AI psychosis? Check out our comprehensive guide on how to identify market trends.

So here's a breakdown to bring you up to speed on a trend that will probably shape our social fabric in the coming years.

Why are people worried?

Viral anecdotes. A series of TikTok posts claim large language models triggered or worsened delusional thinking. See the first-person account from Dylan Schmidt in this clip, a spiritual warning from No Nonsense Spirituality in this post, and a Dutch user's story in this video. Each video passed a million views within days.

Mainstream coverage. A New York Times feature argued that chatbots can "mirror and amplify" delusions in vulnerable users (article). The Week summarized similar concerns with the headline "Can ChatGPT Trigger Psychosis?" (piece). Stanford researchers then warned that poorly supervised AI mental-health tools pose real risks (report).

The spark

The current spike traces back to a July 30 forum thread on r/ChatGPT where an anonymous user described “falling into a simulated reality.” Screenshots reached TikTok and were remixed into dramatic storytelling videos. Traditional media joined once the personal stories went viral.

What experts actually know

• Psychiatrists do not accept “AI psychosis” as a formal diagnosis.

• However, they agree that obsessive interaction with any persuasive system can intensify existing delusions.

• No clinical trials have studied chatbot use in people at risk for psychosis, so evidence is anecdotal.

Numbers at a glance

• Google Trends shows a huge jump in U.S. search interest between July 28 and August 14.

• TikTok hashtag #aipsychosis passed 42 million views on August 15.

• The phrase appeared in 3,200 English-language news articles in the past week, up from fewer than 100 the week before.
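To put the news-coverage figure in perspective, here is a minimal sketch of the week-over-week arithmetic. It uses only the two article counts quoted above; the function name `growth_multiple` is a hypothetical helper, and because the earlier week is given only as "fewer than 100", the result is a lower bound rather than an exact multiple.

```python
def growth_multiple(current: int, previous: int) -> float:
    """Return how many times larger `current` is than `previous`."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return current / previous


# Figures quoted in the list above. 100 is an upper bound on the earlier
# week's count, so the computed multiple is a lower bound on the real one.
articles_this_week = 3200
articles_last_week_upper_bound = 100

min_multiple = growth_multiple(articles_this_week, articles_last_week_upper_bound)
print(f"At least {min_multiple:.0f}x more articles week over week")
```

The same helper could be applied to any pair of counts, but it says nothing about search interest or TikTok views, since the list above gives no baseline for those.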

Possible outcomes

• Policy. Regulators may push for age gates or mental-health warnings inside consumer chatbots.

• Product design. Expect “reality check” prompts or session-time limits aimed at vulnerable users.

• Public discourse. If no medical consensus forms, the term could fade, replaced by broader debates about AI and mental health.

Signals to watch next

• Academic studies comparing chatbot use between clinical and non-clinical populations.

• Terms like “AI-induced derealization” or “synthetic delusion syndrome” gaining traction.

• Any lawsuit linking a specific chatbot session to a mental-health crisis.

Bottom line

The phrase “AI psychosis” is loud, emotional, and still unproven. Yet the mix of viral storytelling, real clinical concerns, and media amplification makes it a trend worth tracking over the next quarter.